Researchers at the University of Toronto and the University of Calgary have developed an innovative approach that uses large language models (LLMs), a form of AI, to streamline the screening process for systematic reviews, which are widely considered the gold standard for evidence.

They created ready-to-use prompt templates that enable researchers working in any field to use LLMs like ChatGPT to sift through published scientific articles and identify relevant ones that meet their criteria. The findings were published recently in the journal Annals of Internal Medicine.

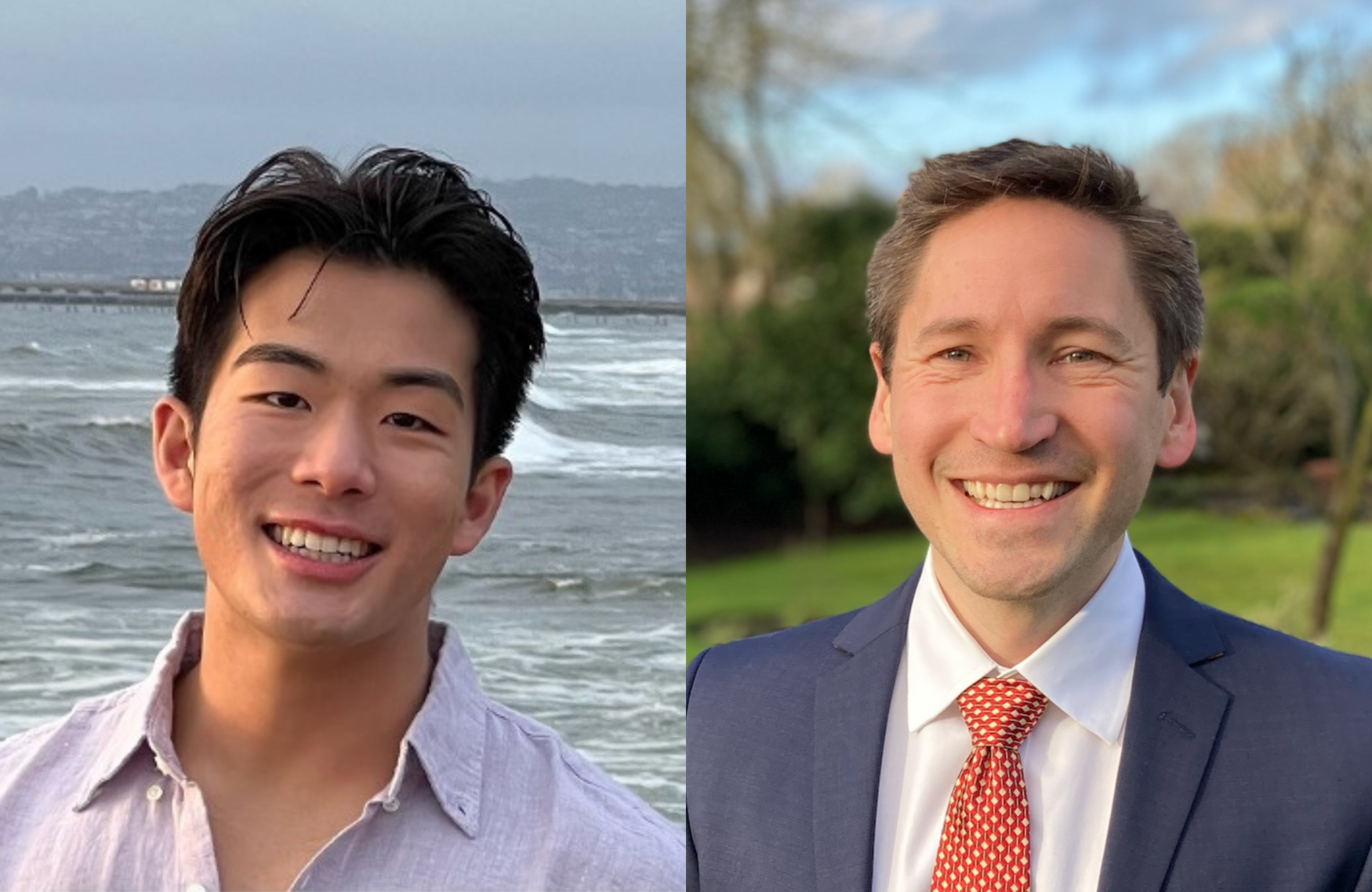

“Whenever clinicians are trying to decide which drug to administer or which treatment might be best, we rely on systematic reviews to inform our decision,” says Christian Cao, the study’s first author and a third-year medical student at U of T’s Temerty Faculty of Medicine.

To produce a high-quality review article, authors first compile all the previously published literature on a given topic. Cao notes that, depending on the topic, reviewers must filter through thousands to hundreds of thousands of papers to determine which studies can be included. Because two human reviewers screen each study, the process is time-consuming and expensive.

“There are no truly effective automation efforts for systematic reviews. That’s where we thought we could make an impact, using these LLMs that have become exceptionally good at text classification,” says Cao, who worked with his mentors Rahul Arora and Niklas Bobrovitz, both at the University of Calgary, on the study.

To test the performance of their prompt templates, the researchers created a database of 10 published systematic reviews along with the complete set of citations and list of inclusion and exclusion criteria for each. After multiple rounds of testing, the researchers developed two key prompting innovations that significantly improved their prompts’ accuracy in identifying the correct studies.

Their first innovation was based on Chain-of-Thought, a prompting technique that instructs LLMs to think step by step to break down a complex problem. Cao likens it to asking someone to think out loud or to walk another person through their thought process. The researchers took it a step further with their Framework Chain-of-Thought technique, which provides more structured guidance by asking the LLM to analyze each inclusion criterion systematically before making an overall assessment of whether a specific paper should be included.
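To make the idea of criterion-by-criterion prompting concrete, here is a minimal Python sketch. It is not the team's published template: the function names `build_framework_cot_prompt` and `parse_decision`, the prompt wording, and the `DECISION:` output convention are all hypothetical choices made for illustration. The sketch only shows the general shape of the technique: enumerate each inclusion criterion, ask for reasoning on each one, and request an overall verdict only at the end.

```python
from typing import List, Optional


def build_framework_cot_prompt(criteria: List[str], article: str) -> str:
    """Build a screening prompt that walks the model through each inclusion
    criterion before asking for an overall decision (hypothetical wording)."""
    # One numbered reasoning step per inclusion criterion.
    steps = "\n".join(
        f"{i}. Does the article satisfy: {c}? "
        "Explain your reasoning, then answer YES, NO, or UNCLEAR."
        for i, c in enumerate(criteria, start=1)
    )
    return (
        "You are screening articles for a systematic review.\n"
        "Think step by step and assess each criterion in order:\n"
        f"{steps}\n"
        "After assessing every criterion, give your overall decision on the last "
        "line as 'DECISION: INCLUDE' or 'DECISION: EXCLUDE'.\n\n"
        f"Article:\n{article}"
    )


def parse_decision(response: str) -> Optional[str]:
    """Return INCLUDE/EXCLUDE from the last 'DECISION:' line, or None if absent."""
    for line in reversed(response.strip().splitlines()):
        if line.strip().upper().startswith("DECISION:"):
            return line.split(":", 1)[1].strip().upper()
    return None


# Example usage with made-up criteria and abstract text.
prompt = build_framework_cot_prompt(
    ["Participants are adults aged 18 or older", "Study is a randomized controlled trial"],
    "Abstract: ...",
)
print(prompt)
```

Asking for the per-criterion reasoning before the final verdict mirrors the "think out loud" analogy. Putting the verdict on a fixed last line keeps the output easy to parse.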

The second innovation addressed the so-called “lost in the middle” phenomenon, in which LLMs can overlook key information buried in the middle of lengthy documents. The researchers showed that they could overcome this challenge by placing their instructions at both the beginning and the end of the prompt. Cao explains that, much as human memory is biased towards recent events, repeating the instructions at the end helps the LLMs better remember what they are being asked to do.
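The placement strategy described above can be sketched as a small helper that "sandwiches" a long article between two copies of the task instructions. The function name `sandwich_prompt` and the delimiter lines are hypothetical and are not taken from the study's templates. The sketch only illustrates the idea of stating the instructions both before and after the document.

```python
def sandwich_prompt(instructions: str, document: str) -> str:
    """Place the task instructions both before and after a long document so they
    are not "lost in the middle" (hypothetical layout, for illustration only)."""
    return (
        f"{instructions}\n\n"
        "=== ARTICLE START ===\n"
        f"{document}\n"
        "=== ARTICLE END ===\n\n"
        "Reminder of your task:\n"
        f"{instructions}"
    )


# Example: a full-text article would normally sit between the two copies.
task = "Decide whether this article meets the inclusion criteria listed above."
print(sandwich_prompt(task, "Full text of a long article ..."))
```

Repeating the instructions costs a few extra tokens per article. In return, the task description sits close to where the model starts generating its answer.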

“We used natural language statements because we really wanted the LLMs to mimic how humans would attack this problem,” he says.

By incorporating these strategies, the prompt templates scored close to 98 per cent sensitivity and 85 per cent specificity in selecting the right studies based on the abstracts alone. When asked to screen through full-length articles, the prompt templates performed similarly well with 96.5 per cent sensitivity and 91 per cent specificity.
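Sensitivity and specificity have standard definitions. Sensitivity is the share of truly relevant studies that the screener keeps, TP / (TP + FN). Specificity is the share of irrelevant studies that it correctly excludes, TN / (TN + FP). The Python sketch below computes both values from lists of reviewer labels and screener decisions. The counts in the example are invented. They were chosen only to illustrate what 98 per cent sensitivity and 85 per cent specificity mean, and are not the study's data.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(truth: Sequence[bool], predicted: Sequence[bool]) -> Tuple[float, float]:
    """truth[i] is True if study i belongs in the review (per human reviewers);
    predicted[i] is True if the screener included it."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)          # relevant and kept
    fn = sum(1 for t, p in pairs if t and not p)      # relevant but missed
    tn = sum(1 for t, p in pairs if not t and not p)  # irrelevant and excluded
    fp = sum(1 for t, p in pairs if not t and p)      # irrelevant but kept
    return tp / (tp + fn), tn / (tn + fp)


# Invented example: 50 relevant studies (49 kept), 1,000 irrelevant ones (850 excluded).
truth = [True] * 50 + [False] * 1000
predicted = [True] * 49 + [False] * 1 + [False] * 850 + [True] * 150
sens, spec = sensitivity_specificity(truth, predicted)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.98, specificity=0.85
```

In screening, high sensitivity usually matters most, because a missed relevant study cannot be recovered later. An irrelevant study that slips through can still be excluded at a later stage.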

The researchers also compared different LLMs, including several versions of OpenAI’s GPT, Anthropic’s Claude and Google’s Gemini Pro. They found that GPT-4 variants and Claude 3.5 had strong and similar performance.

The study also highlights how LLMs can produce significant cost and time savings for authors. The researchers estimated that traditional screening by human reviewers can cost thousands of dollars in wages, whereas LLM-driven screening costs roughly one-tenth as much. LLMs can also shorten the time required to screen articles from months to less than 24 hours.

Cao hopes that these benefits, together with how easy the prompt templates are to customize and use, will encourage other researchers to integrate them into their systematic review workflows. To that end, the team has made all of its work freely accessible online.

“The current guidelines have no validated or recognized approach to automation and systematic reviews,” he says. “Our dream is that this work will contribute to future automation efforts and inform downstream guidelines.”

As a next step, Cao and his collaborators are working on a new LLM-driven application to facilitate data extraction, another time-consuming and laborious step in the systematic review process.

“We want to create an end-to-end solution for systematic reviews where clinical-grade research answers to any medical question are just a search away.”